It's no secret that generative AI is taking the world by storm, drawing both wonder at its technical prowess and criticism on ethical grounds. Stable Diffusion marked a milestone in the rise of AI imagery: it was the first open-source state-of-the-art model released, in a relatively compact and performant package able to run on consumer-grade GPUs (whereas other famous alternatives such as Midjourney are closed-source and run only on massive cloud compute).

Stable Diffusion?

Stable Diffusion is a new “text-to-image diffusion model” that was released to the public by https://t.co/yo04zzpJXY

It’s similar to models like Open AI’s DALL-E, but with one crucial difference: they released the whole thing.

Beta https://t.co/FTFWSeVJlJ pic.twitter.com/uGhUS5cToP— Abtin Setyani — e/acc (@abtinset) August 31, 2022

And where there is open source, there is Blender! The Blender community has wasted no time in testing Stable Diffusion as a texture generator and integrating it into Blender in various ways. Here are some examples that stood out to me:

CEB Stable Diffusion

Carlos Barreto is an add-on developer known for his great tools, which often incorporate an AI component, including motion capture right in Blender. His rapidly developing CEB Stable Diffusion add-on already has both txt2img (image generation from a text prompt) and img2img (diffusion guided by an input image) implementations, and is generally a great way to have a complete build of Stable Diffusion on your machine.

CEB Stable Diffusion 0.2 wip (with img2img) pic.twitter.com/f8Y5Cc1cT8

— Carlos Barreto (@carlosedubarret) September 5, 2022

CEB Stable Diffusion 0.2 WIP

Multiple images generation and selector pic.twitter.com/Y9YRXoNeGo— Carlos Barreto (@carlosedubarret) September 3, 2022

Stable Diffusion as a Live Renderer Within Blender

Over on the Blender subreddit, Gorm Labenz shared a video of an add-on he wrote that enables the use of Stable Diffusion as a live renderer: it reacts to the Blender viewport in real time and generates an image (img2img) based on it and on prompts that define the style of the result. The whole thing is incredible to witness and opens up whole new workflows for AI-assisted look development.

I wrote a plugin that lets you use Stable Diffusion (AI) as a live renderer

by u/gormlabenz in r/blender

AI Suzanne

Also on the subreddit, user "Renaissance_blender" shares a Stable Diffusion-enhanced Suzanne, once again using AI as a renderer on top of a "basic" image prompt from Blender.

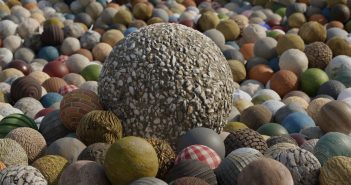

Texture Generation

And finally, here is an example of one of the most basic yet useful applications of Stable Diffusion: texture generation, with endless variations just a few words away!

AI text-to-image generators are pretty good at generating images! Here's one I did with Stable Diffusion

by u/PityUpvote in r/blender

7 Comments

Hello, this is incredible and extremely important work. I have been thinking for some time about how incredible it will be when we can apply these AI technologies to our 3D workflows. So many benefits, and how limitless and efficient the creative process would become for 3D and graphic artists in general. Thank you for that, and congratulations!! Seeing that there are people with that level of commitment is what makes the rest of us dream.

This is an international community - please post in English.

Mr POZO is awed by the huge possibilities this new tech offers CG artists.

Thank you for being a good human

Right click and hit "translate to english" technology brings us together Alhumdulillah

Exactly - the polite thing to do would be to translate it before posting ;-)

In a perfect world, AI would make everything on the web appear in the native language of the viewer. Hey, LaMDA, get on that, please.