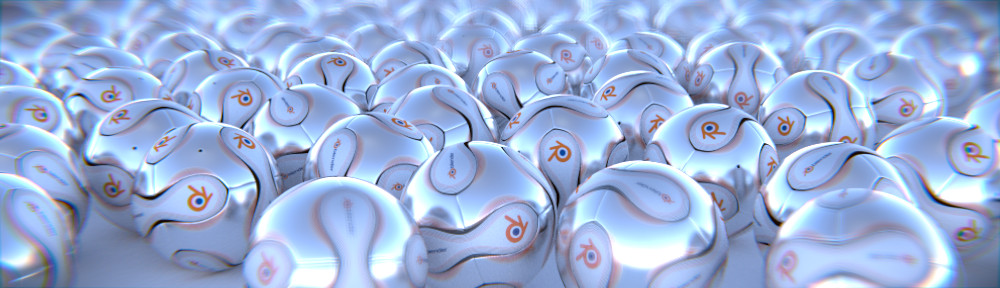

Theo Burt used Blender, Yafray, Python and Puredata to create a mesmerizing 'virtual life' simulation. I have always been interested in these simulations, but I've never seen them visualized like this.

Theo writes:

I've just completed quite a large experimental sound/video film called 'gridlife.1', completely rendered with Blender/Yafray. The film consists of one seamless 10 minute camera shot following a system which is displayed on an expanding grid of light bulbs. Entities in the system compete for resources with each other but require one another to survive, creating social behaviour including migrations and mass extinctions. The sound is also computer-generated and completely synchronised to the system, so that all entities on the grid can be heard at once from their correct directions, creating a true ambience. The film was developed over three months, using Python scripting to build scenes from the output of a realtime version of the system. The final video was rendered at the ResPower farm using their extremely cheap Blender subscriptions, which kept the budget of the movie at virtually nothing.

The full movie can be downloaded at http://www.theoburt.com.

There is more information about the rules of the simulation available on the site. If you can, grab the full-res Xvid version (199MB) - the download is more than worth it. Yes, we did warn Theo ;-)

14 Comments

very exciting project. downloading...

Downloading. An interesting application of Python.

very cool, i enjoyed watching it, i would like to see how it was implemented.

That was pretty freakin' awesome! I have got to learn python.

I'm very interested in knowing how the camera motions were done. I've theorized about using something like Icarus to make "hand-cam-like" camera movements for something that isn't there by just pointing a video camera at a wall with a grid on it or something... but I'm the most confused how you got the camera to 'follow' things, and furthermore it was done while manipulating time which is even more mind-boggling.

Let me see if I can guess a process here...

1. Python simulates life via scripting beyond my comprehension. This information was "baked" into IPO form.

2. You later played with the world's "time" IPO to make things more amusing at specific points in time.

3. You rendered a rough draft of the film.

4 (1). You pointed a real camera at a screen playing the film with maybe some sort of grid overlay

4 (2). You pointed a real camera at a wall and did your best to guess where you were aiming.

5. The video camera footage was tracked and the camera movements replaced the static camera.

It took me a while to understand what was going on in this film, but by the end of it I had picked up on the rhythms and it made a strong impact on me. I'd be interested in putting this in my spring-time back yard film festival. :)

perhaps epileptics should not watch this...

@Bmud:

My next tutorial will include an extremely simple way to create a handheld camera effect from within Blender, no scripting involved.

I personally was impressed by how real the lights looked.

See it - like it.

Kernon - I am very interested to see this tut.

The camera movements look absolutely realistic - great!

I'd like to see a tute just on creating the LED lights alone.

Great stuff.

I was mulling over how the natural camera movement could be produced.

It could be that the camera is fixed on the LED arrangement using a constraint, and then the constraint IPO is jittered / varied so that the focal point of the camera meanders from 1.0 (full constraint, locked movement) to say 0.8 (a slight amount of jitter) then you'd just add random movement IPO's to the camera and you'd have a camera that is largely focused on the object but also has a degree of free movement.
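To make the guess above concrete, here is a minimal sketch of the blending idea in plain Python, not Blender's constraint API. The function names `blended_aim` and `jittered_influence` and the 0.8 to 1.0 range are illustrative assumptions taken from the comment; this is not how the film was actually made.

```python
import random


def blended_aim(target, free_aim, influence):
    """Mix a locked aim point with a free-moving one.

    influence = 1.0 means the camera looks exactly at the target,
    lower values let the free aim point pull the view away.
    """
    return tuple(
        influence * t + (1.0 - influence) * f
        for t, f in zip(target, free_aim)
    )


def jittered_influence(frames, low=0.8, high=1.0, seed=0):
    """Return one influence value per frame, wandering between low and high.

    Hypothetical stand-in for a hand-edited influence curve.
    """
    rng = random.Random(seed)
    return [rng.uniform(low, high) for _ in range(frames)]


if __name__ == "__main__":
    grid_centre = (10.0, 0.0, 0.0)   # where the lights are (made-up value)
    drifting_aim = (0.0, 0.0, 0.0)   # where the free camera would look
    for frame, infl in enumerate(jittered_influence(5)):
        print(frame, blended_aim(grid_centre, drifting_aim, infl))
```

With a real random-movement curve in place of the fixed `drifting_aim`, the view would stay mostly on the grid while wandering slightly from frame to frame.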

Hey guys, thanks for all your comments. Just to let you know, a realtime version of the system, producing the spatial sound with 2-D graphics, was built in Pure Data. The camera movements were recorded then. The camera was a physically-modelled mass, so camera jitter was automatically produced when you moved it. All data from Pure Data was captured over a single 'performance' of the realtime system, and this was read by Python scripts which then assembled Blender scenes from the raw data.
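For readers wondering what a "physically-modelled mass" camera might look like, here is a rough sketch in plain Python. It assumes a simple spring-and-damper model in one dimension; the class name `MassCamera`, the parameter values and the 25 fps timestep are all made up for illustration, and the actual Pure Data patch may work quite differently.

```python
from dataclasses import dataclass


@dataclass
class MassCamera:
    """A camera treated as a mass pulled toward a target by a damped spring."""
    position: float = 0.0
    velocity: float = 0.0
    mass: float = 1.0        # assumed value
    stiffness: float = 40.0  # spring strength, assumed value
    damping: float = 6.0     # velocity damping, assumed value

    def step(self, target: float, dt: float) -> float:
        # Spring force pulls toward the target, damping resists motion.
        force = self.stiffness * (target - self.position) - self.damping * self.velocity
        # Semi-implicit Euler: update velocity first, then position.
        self.velocity += (force / self.mass) * dt
        self.position += self.velocity * dt
        return self.position


def record_path(targets, dt=1.0 / 25.0):
    """Feed one target per frame to the camera and return its recorded positions."""
    cam = MassCamera()
    return [cam.step(t, dt) for t in targets]


if __name__ == "__main__":
    # The operator snaps the target from 0 to 1; the camera lags, then settles.
    path = record_path([1.0] * 250)
    print(path[:5], path[-1])
```

Because the mass has inertia, a sudden move of the target makes the camera lag behind, overshoot a little and then settle, which is one plausible source of the hand-held feel. A recorded list like `path` is the kind of per-frame data a script could later turn into camera keyframes.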

HOW DO I DOWNLOAD THIS VERY INTERESTING PROGRAM

I'd like to see the .blend for this... this looks awesome.