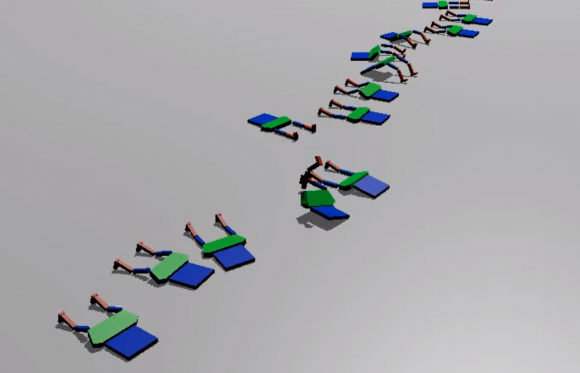

What do you get when you use Python and the Blender Game Engine to teach systems to perform tasks? Fascinating videos of creatures learning to walk, stumble and get up again. I love this stuff.

Jean-Francois Gallant writes:

After getting the result I want with Molecular and waiting for a "mesher" be available in Blender , I'm starting to have fun (and try something new) with Neural Network and Genetic algorithm. All my curiosity coming from my brother that talk to me about learning machine algorithm. I found a popular tutorial about this on internet. So from that , I'm starting to code a python module with Cython. What I learn is it's more difficult to find good parameters , right inputs to feed to the module and how to " reward " good behaviors then code the module itself. I get some difficulty at this time to understand all this the first time. I do a lot of try at each new type of simulation but when you start to get result it's very impressive. In all videos , all "players" learn by itself. No pre-programming behaviors. For example: in the pole balancing video , each players are rewarded on how long the pole stay straight and how far it's stay from the border. In p acman video , how many yellow point they catch and how far they stay away from ghost. For quadruped and biped video it's about how far they can go without the body touch the ground.

The goal of this is when you get the result you want , you are be able to save the trained network in a file and re-use it where you want (game) and not to have to training it again each time. For now saving and loading network in a file function is not avalaible ( but can be coded on python side ).

Here is a couple of link about my test about this.

Links

Video of biped try to walk

Video of quadruped try to walk

Video of carts and pole balancing

Video of pacmans and ghosts (co-evolution where the progress of one help to the other to progress further )

Video of tanks try to catch points

17 Comments

I like the quadruped one the best.

And now teach them to talk, think and meditate about the meaning of life! :)

Thanks for the tutorial link. But this also reminds me of how little we know about the workings of the human and animal nervous systems.

Looks like some parties I've been to. First, everyone is standing on two legs, then falling all over the place. In the morning, they're crawling back home.

Awesome, i've been wondering for a long time if someone was onto this AI area using blender.

Like about 10 years ago, there where several initiatives from people wrote programs that did amazing things.

Biots was one, and there where more, some where projects other where single man made programs.

I could watch for hours to them seeing them evolve. I cant find back those programs these days as virtual life got a whole new meaning (facebook/second life/ea..)

I wonder what are your plans with this.

I guess there still must a a scene of virtal live fans, around who are into building it like you did.

And who might extend your creations and ideas, or would like to work with you.

For now nothings special , I just did this for fun for now. Instead of trowing away my 2hours in public transport each day ( train ) , I use it to have fun like this. Doing some stuff that take my attention but probably never get time to test it somewhere else. Some little game exist on the system like you said.

the keywords you'd need to look for would probably be more like "Virtual Creature Evolution" or "Virtual Walk Evolution" or something along those lines. Virtual Life most certainly will not be helpful as you already discovered :)

I found this one research video once where they mentioned "novelty search" learning.

Basically, if you want your creatures to run far distances, you could very easily come up with "distance from starting position to end position" = score.

They, however, did something much simpler and it's rather ingenious:

Whenever your set ends its walk in some place new, some place where no previous instance has ended up, reward it.

Surprisingly, though maybe not THAT surprisingly if you think it through, this leads to better convergence and more stable walks than the default.

I don't know the details of this technique - like, what counts as a "new place". But I'd guess if you go for something like the bounding sphere and say that the bounding sphere of your current iteration has no overlap with any previous bounding sphere (this would just be a list of all new places already visited, and a check between all of them via the sphere radius), that should be good enough.

Ah, I read up on it now. A simple measure they are using is "sparseness" of the explored areas.

In case of a walker like what you evolved in the first two videos:

The novelty score of your walker is the average distance you walker ended up at from any previous walker.

So sum the distances of the endpoints of all previous walkers to the end point of the current walker and divide by the number of walkers.

Instead of telling the walkers "walk further", it tells them "end up somewhere where nobody else has been before."

High scores mean, the average distance to any previous walker is high, so the found location is particularly novel.

This should be even better than having to do a bunch of sphere collision checks.

Obviously you'll need to slightly change that definition if you want to keep your program as-is with all the walkers of one generation spawning in a line. Though all this takes should be to subtract the added offset at the start from the end position again, and then you can use the same formula.

not sure is the same thing you talked about. Never read about novelty search. Is in the family of search space algorithm? I use neural network and genetic algorithm. Neural Network is a series of inputs , ouputs and between them "hidden neurons". Each inputs is linked to all hidden neurons and hidden neurons to ouput. All this links is multplied by a weights, So when you insert value in the input , you get value come out the output and the output result depends of the values of the weights. So if you want a precise output results , you need to find the right value for all weights. Is impossible to do by hand for a walking characters or probably difficult for AI like the pacman or balancing pole videos. So is where the genetic algorythm come in the equation. All weights a set randomly at the generation 0 and walker try to go far away that they can with this value ( just falling for major case in generation 0). I attribute some score to the walker directly attributed by the distance he make. Genetic algorithm choose two mates to be reproduce by a "roulette wheel" , where highest score walker have more chance to be selected then lowest one. But lowest one have a bit of chance to , it's help to keep genetic diversity and avoiding to get your evolution stock to a "local maxima".So reproduction exchange there genetic data ( here the weights ) to get the next generation. Ounce the next generation is created , a small chance of mutation is apply on there weights and is ready to be tested again ( try if the new value can go more far then the last generation ). Alot of docs is available about Neural Network and Genetics Algo ( and many other seach space algo ). I want to share the link of the paper ... a more advanced system then mine : http://www.staff.science.uu.nl/~geijt101/thesis/index.html

Novelty Search is one search method for Genetic Algorithms.

You know how you would typically have the following GA Algorithm:

1) Simulate a bunch of creatures

2) Rank them on a fitness score

3) Assign chances of reproduction based on fitness score, generate a set of offsprings accordingly

4) Randomly mutate offsprings a little

5) back to step 1 using this set of offsprings.

A typical fitness function for having 3D virtual creatures, like your bipeds or quadrupeds, walk would simply be distance-based.

Something like "euclidean distance from start- to end-point", usually.

Novelty search would proceed differently:

It would assign a score based on how novel the behavior is.

In case of this same walking example, you would store a list of all ever-reached endpoints, relative to the starting points of your creatures. (In practice you can get away with storing only, like, the 100 or so newest behaviors, in case "all behaviors" is too much)

Then, as a fitness score, for every creature you would determine the k-means of that behavior over the list of stored behaviors.

That is, you average over the k values (where k is a chooseable parameter) that are closest to the value you get as end-position for your current walker.

If this average is small, that means a lot of walkers have ended up close to the very same spot.

If it is large, very few walkers have.

That means, initially, walkers get rewards for EVERYTHING. However, very soon, "falling over from stand-still" will have been done by a lot of walkers who, thus, no longer receive rewards. Only those who find a different way to end their run will keep being rewarded.

Somewhat surprisingly, this tends to converge to more stable walkers much more quickly than the fitness based approach. - Apparently the reason for this is, that fitness based approaches initially give very high fitness to falling over (the walker that can fall over in a fashion such that it lands most distant from the origin will receive most fitness), which means that balancing is not at all encouraged.

Because novelty search only looks for change in the position rather than directly for distance, it will quickly stop favoring those that fall and fitness growth will tend to be significantly higher.

All other steps, besides Step 2, are left alone unchanged.

Here is a really long paper on it - the vast majority of it, though, is just tests on how things change if you apply this technique (or one of the variants given towards the end of the paper) - I already described to you how it works, though this paper elaborates on it further, but if you want, it's sufficient to just skip to the various graphs in this paper. They pretty much summarize the whole document.

http://joellehman.com/lehman-dissertation.pdf

Also try to just put "novelty search" into YouTube - there are tons and tons of talks about it. As far as GAs go, it's a pretty new technique but its popularity is growing pretty rapidly.

upon further inspections, never mind: there are not a ton of videos on YouTube about Novelty Search. Still, there are some.

no problem , you give me enough hints to thinking about this. I don't know if I have the skill to implement this but I will check. If I understand , my system stay the same , it's just all about how I give them rewards. Did you already study in machine learning ? You seam to have some knowledge in this subject. Thanks again.

Wow ! Thanks about this info ! Not sure I understand everythings for now and how to apply this but you give me a got start for my research about this! Thanks again !

What exactly you mean by "you would store a list of all ever-reached endpoints, relative to the starting points of your creatures". I need to store all ( or last 100 like you said ) distance of all creatures ? Each creatures have is own list and k-mean or it's a global list for all creatures ? thanks

I did visit (but never finished) the coursera course on artificial intelligence by stanford.

Beyond that, I mostly just read reserach papers on the topics of genetic algorithms and neural nets, because I'm really interested in them.

So I don't think you should consider me an expert, but I know at least a thing or two about those topics.

You are correct, all that would change is your reward function.

About the List you store:

You could think of more complicated variants, but for the current task at hand, evolving walkers that walk as far as possible, this is a simple method for implementing novelty search:

For each walker:

Your walker started at point (x0,y0), when the simulation began.

Your walker ended at point (x1,y1), when the simulation ended.

So you take the difference:

(x1-x0,y1-y0).

THIS value, these two coordinates, you store in a list. It describes the behavior of your creature.

If you want the "full" version, you'd need to store this value for each and every creature you ever evolve. As you get into the thousands of generations, that list would get very long.

But in practice, it suffices to store the 100 or so most recent values.

So you would have one creature that walks, say, from (1,2) to (5,3).

What you store for that creature would be (5-1,3-2) = (4,1)

Do this for the most recent values.

Once you have such a list, the next step is to compare your current creature to the values in that list.

Say, the behavior of your particular creature is (2,2).

Find the k behaviors that are closest to that value. For simplicity, let's assume k=3 (in practice, this should probably be higher.) and you have the following list:

(0,0)

(5,7)

(1,2)

(2,1)

(3,1)

(0,4)

(2,3)

To find the "distance of the behaviors", you just find the distance between the vectors:

(0,0)-(2,2) = (-2,-2)

(5,7)-(2,2) = (3,5)

(1,2)-(2,2) = (-1,0)

(2,1)-(2,2) = (0,-1)

(3,1)-(2,2) = (1,-1)

(0,4)-(2,2) = (-2,2)

(2,3)-(2,2) = (0,1)

Now you take the length of all those vectors:

||(-2,-2)||^2= 2^2+2^2 = 8

||3,5||^2 = 3^2+5^2 = 34

etc.

and your closest 3 behaviors turn out to be:

(1,2)-(2,2) = (-1,0) => 1

(2,1)-(2,2) = (0,-1) => 1

(2,3)-(2,2) = (0,1) => 1

Take the average of those values

(1+1+1)/3 = 1

And THIS now is the "fitness score" of your creature. - Note that it relies on the history of all previous creatures

Obviously, I took super easy values. You'll likely get something very different when you run the actual simulation.

The idea is, that if a lot of walkers cluster at a single point, they are too similar to be interesting. You want to find those walkers that try something new. The outliers. (Since, in Genetic Algorithms, typically the norm isn't success)

If the k-means (I here used the 3-means - the average of the three closest values) is large, that means, even the closest creatures are rather far away. If it is small, that means a lot of other creatures had almost the same behavior.

I hope that helps!

I now also read up on the ANN side of things.

I assume you have a fixed topology network where you genetically directly encode the strength of each connection? If that's the case, there are some real limits to this.

This is much more complicated than Novelty Search, but walkers are a surprisingly hard problem so you might actually need this to get your walkers to really walk:

Look up the NEAT-algorithm and its improvements, HyperNEAT and HyperNEAT-ES.

With those I'm not quite sure how to implement them, though there is a lot of information about them online. They allow you to evolve Neural Networks with increasing complexity.

There are lots and lots of papers about them online - try Google as well as Google Scholar.

And at least for NEAT and HyperNEAT, there also are a number of talks and demonstrations on YouTube. Those are the state-of-the-art methods for evolving creatures today.

The ideas behind them are fairly simple but I'm not so sure about the exact details.

Check it out and good luck!

Oh, a lot of papers and presentations also have associated freely available sourcecode in various languages. If that's easier for you, perhaps you could find some of those and port them to python/bpy. (I didn't see any that already are in python. One I found is SharpNEAT, written in C# - here a starter http://stackoverflow.com/questions/501465/any-good-sharpneat-tutorial )

Just in case you are wondering: The course I mentioned (the first in this list), as well as a lot more on the same or similar topics:

https://www.coursera.org/courses?orderby=upcoming&search=artificial%20intelligence

the last time I checked, those pretty much exclusively were about static neural networks. No evolutionary algorithms beyond mentioning them as a side note.

Though the courses are great and as long as you don't want a certificate, enrolling is completely free.

And even if you don't have the time to go along with the courses, you can always just enroll anyway and watch the videos on the various topics. Failing a course doesn't matter at all - just retry it next time (if you even actively attend)

I highly recommend it.

There are also the other free sites with a similar payment scheme (pay if you want an optional certificate, attend for free) but I have way less experience with them:

https://www.edx.org/

https://www.udacity.com/

Still, one hears good things about them.