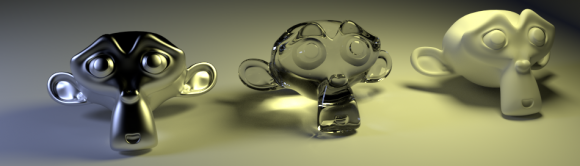

Help compare GPU speeds with the Blender GPU Benchmark.

Sidy Ndiongue writes:

I have started a Blender GPU benchmark in Cycles to compare the performance of different GPUs.

I have created a benchmark that compares different GPUs in Cycles. The benchmark is currently Nvidia-only because OpenCL is not fully implemented. Multi-GPU support will be available once it becomes a full feature.

How to use it

Links

49 Comments

When will ATI cards be supported? Half of the community is essentially being alienated here when they don't have an Nvidia CUDA card. Please create wider support for GPUs instead of adding tons of fun features for the 20% of the community that have a supported card. Thank you.

It's not Blender's fault. Read some of the forum threads about it. OpenCL is behind CUDA feature-wise, and additionally AMD's drivers are buggy.

So is it 'Half of the Community' or '20% of the community' or some other random and self serving percentile?

I guess I'll make some broad sweeping statements with little to nothing to support my point other than my own little ideas of how the world should run too :

Anyone that is a serious content creator uses an Nvidia card. Generally, what informed, intelligent people do is make their purchasing decisions based on their requirements and the capabilities of the item to be purchased. Not demand that other people bend over backwards to cater to their means.

Well... that's what 20% of the people do at least.

So those who use AMD cards are not intelligent and it could not be because it is what they use as a company, and have no control over those decisions. No, they must be ignorant and clearly not very good when it comes to being a 3D Artist. You should continue to make broad sweeping statements with little or nothing to support your points. You're an ignorant and pathetic tool.

Oh, I'm sorry... You work for a company! Goodness me. I had no idea. Well of course Cycles working on AMD GPUs should take top priority. Stop all other development and let's just get this nut cracked! C'mon people... We got a guy that works for a COMPANY over here!!!! Lord knows that companies never make bad purchasing decisions and that every man-jack what works for a company is a paragon of perspicuity. And I just bet you're a fantastic 3D artist to boot. And while you're at it... I got these Matrox boards...

I for one am very excited to see some "serious content" of yours, mister Man, sir. If you would be so kind, I'm sure we could all learn a hell of a lot from you. If not 3D related, at least some interesting communication skills that will surely get us far in life.

Gman, you are possibly the most ignorant and arrogant person I have ever had the displeasure of having to reply to. What you say about people not being intelligent for choosing AMD is a load of bullsh!t. I have a £2000 extreme workstation that I bought specifically for 3D modeling, and it's all AMD stuff, but because I spent so much I got extremely powerful hardware, much higher than I could get from Nvidia/Intel for the same money.

By the way, if you want to know if I can create good models, have a look at the image below. It's my most recent one, not finished but good enough to shut you up.

Just so everyone knows, I'm not a fanboy of AMD. I just prefer to spend my money on what will suit my needs more effectively. I also have another computer which is an Intel/Nvidia build.

Bizla, I do agree that this guy is a _____ (please insert bad word here).

However, I have looked into why ATI is not yet supported, and it seems that Nvidia has some sort of technical advantage over the ATI cards, which is making it difficult for the developers to support them.

I would personally love to be able to use my GPU for live rendering, but it is an ATI, so I feel your pain.

20%... Don't be silly...

People serious about GPU rendering choose their configuration accordingly.

Blender is not a toy.

I agree. Vendors of professional software also often support quite a limited range of specs. Autodesk Inventor used to be quite picky about video hardware.

Getting a new video card costs nothing compared to license costs. This is why people don't complain when it comes to proprietary software. With Blender we are used to getting good stuff for free. Now, we will have to invest. I did. I just installed a GTX 550. It renders about 100x faster than my poor CPU.

We need to poke AMD harder. OpenCL is not as mature as CUDA. That's the main reason.

More than 100 users have already collected data on this over at blenderartists.org:

https://docs.google.com/spreadsheet/ccc?key=0As2oZAgjSqDCdElkM3l6VTdRQjhTRWhpVS1hZmV3OGc#gid=0

I cannot choose either CUDA or OpenCL. My Blender only says Experimental or Supported.

Did you choose Cycles Render?

Did you enable GPU rendering?

Then CUDA should be selectable.

What Roeland says.

In newer builds you have to go into User Prefs/System and set the compute device (bottom left) to CUDA.

Here is our wiki page about GPU render state:

http://wiki.blender.org/index.php/Doc:2.6/Manual/Render/Cycles/GPU_Rendering

Note that for similar OpenCL render engines (V-Ray RT) the same limitations apply. Cycles specifications are just really demanding (a core does a complete trace and shading, where other render systems only raytrace on GPU). We can only wait for ATI/AMD to catch up, which they promised they would.

-Ton-

Promised they would... when exactly? I'm a big fan of ATI, I feel they offer a lot more bang per buck, yet I might consider upgrading my 4890 somewhere down the line and would like to know how things stand. Can we expect this catching up to be done within give or take 6 months, or are we talking about a year (or two)?

The 4890 won't work. It is Nvidia who developed GPGPU, not AMD. They are several years behind.

AMD can do OpenCL... Ton just said they promised they'd catch up ("We can only wait for ATI/AMD to catch up - which they promised they would." - Ton). Hence my question.

I'm aware the 4890 doesn't work, because I have it and... well, it doesn't work.

Ton rocks !

Great idea!

But doesn't the CPU affect the benchmark result?

Yep, that's really a shame, we have a great benchmark already there: http://blenderartists.org/forum/showthread.php?239480-2.61-Cycles-render-benchmark Why start over again?

Because a forum thread is not always the best medium for viewing this kind of thing.

I hope that their (AMD/ATI) support will come early with their new 7970 and 7950 cards, or my 6950 will fly into a trash can :)

I'll take your 6950, then I can run CrossFire. :-)

I like this benchmark better than the other one. The spreadsheet just seems messy and the render time is a bit low.

Better keep track of the operating system as well. The other benchmark shows that Linux systems render considerably faster than Windows.

If this is the spreadsheet, it is already messed up:

https://docs.google.com/spreadsheet/ccc?key=0As2oZAgjSqDCdElkM3l6VTdRQjhTRWhpVS1hZmV3OGc#gid=0

Some users post times such as 3.1, some stick to the format XX:XX:XX, and some do it right by writing XX hrs XX minutes, which you can fully understand.

Please stick to a universal time format and state it in the table header, e.g. Rendertime CPU [hr:min:sec].
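As a rough illustration of the point above, here is a minimal Python sketch that normalizes the time formats mentioned in this comment to hr:min:sec. The function names are made up for this example, and rejecting a bare number such as 3.1 is an assumption of the sketch (its unit cannot be known), not something the spreadsheet itself does.

```python
import re


def parse_render_time(text):
    """Return a render time in seconds, or raise ValueError if it is ambiguous."""
    s = text.strip().lower()

    # Colon-separated: hh:mm:ss or mm:ss (the seconds may be fractional).
    if re.fullmatch(r"\d+(:\d+){1,2}(\.\d+)?", s):
        parts = [float(p) for p in s.split(":")]
        while len(parts) < 3:
            parts.insert(0, 0.0)  # pad missing hours
        hours, minutes, seconds = parts
        return hours * 3600 + minutes * 60 + seconds

    # Worded: "1 hrs 4 minutes", "8 min 34 sec", and similar.
    units = {"h": 3600, "m": 60, "s": 1}
    matches = re.findall(r"(\d+(?:\.\d+)?)\s*(h|m|s)[a-z]*", s)
    if matches:
        return sum(float(value) * units[unit] for value, unit in matches)

    # A bare number like "3.1" could be minutes or hours, so refuse to guess.
    raise ValueError(f"ambiguous render time: {text!r}")


def format_render_time(seconds):
    """Format seconds as hh:mm:ss, rounded to the nearest second."""
    whole = int(round(seconds))
    hours, rest = divmod(whole, 3600)
    minutes, secs = divmod(rest, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"
```

With this, `format_render_time(parse_render_time("1 hrs 4 minutes"))` gives `01:04:00`, while an entry like `3.1` is flagged instead of silently misread.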

It should not take much time to write a Python script that automates a bunch of tests and then sends the results through a POST to some web service. If I had the time I would definitely do it.
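A minimal sketch of what such a script might look like, using only the Python standard library. The JSON field names and the idea of a results URL are assumptions made for illustration; no such web service is described in the post.

```python
import json
import subprocess
import time
import urllib.request


def time_command(command):
    """Run a command and return its wall-clock duration in seconds."""
    start = time.perf_counter()
    subprocess.run(command, check=True)
    return time.perf_counter() - start


def build_payload(gpu, cpu, os_name, seconds):
    """Bundle one result as JSON bytes (field names are hypothetical)."""
    record = {
        "gpu": gpu,
        "cpu": cpu,
        "os": os_name,
        "render_seconds": round(seconds, 2),
    }
    return json.dumps(record).encode("utf-8")


def post_result(url, payload):
    """POST the JSON payload and return the HTTP status code."""
    request = urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.status
```

A run could then time a background render with something like `time_command(["blender", "-b", "benchmark.blend", "-f", "1"])`, where `benchmark.blend` is a placeholder file name, and pass the result to `build_payload` and `post_result` with whatever URL the service actually uses. Note that this measures the whole process, including Blender's startup and scene loading, not just the render itself.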

Maybe you should add a viewport benchmark, because that's where NVIDIA fails big time... I haven't even looked at the results yet, but I expect the worst performing viewport cards to perform best in Cycles...

As expected. If someone buys a GPU because of this list, he will have the worst Blender experience he can get, except for rendering.

Anyone have problems using a NVidia 3200 Quadro? It renders fine, just isn't any faster than CPU even though it has 192 cores and the latest NVidia drivers.

I have a Quadro FX 580. Doesn't work at all. Shader model 4 is not recognized. Says 1.0. There is some fixing to do, obviously.

CUDA will last for 3 years... tops. After that, OpenCL will take over, because more companies are investing in this technology. Look it up. Of course, because of the CUDA boom, it is better to have an Nvidia card, and 3 years is a lifetime for a graphics card, so I understand why developers are focused on CUDA.

Maybe, or maybe not. For right now, though, the APIs in OpenCL do not exist yet for the developers of Blender to take advantage of, so more work is being done on CUDA than on OpenCL. The current projects I see happening are actually neither, as both are on hold at the moment anyway so that OpenMP can be integrated better and new independent features, separate from CUDA and OpenCL, can be added. Personally, yes, I would like to see OpenCL surpass CUDA in what it can do and in speed, as then I will be able to get the faster AMD cards with more graphics RAM. Nvidia right now doesn't have as much speed or graphics RAM on its cards as AMD, which will most likely change soon to be the other way around again.

Thanks for these benchmarks! Although I'm not sure how useful they will be... I see 2 benchmarks for EVGA GeForce GTX 560ti, one with a render time of 5:30, the other 9:53. This is a huge difference in time for the same video card. I think it would be more useful to include more information with these benchmarks: CPU, OS, video memory and RAM type, etc.

@Roeland Janssens "20% of the community that have a supported card"

Actually, the majority of graphic artists use Nvidia, not AMD... for many reasons; good Linux drivers is one of them. AMD is great for games, entertainment... So Roeland, I guess you have to decide if you want to play games or create 3D graphics? In the 90's I had a choice once between 3dfx and 3D Labs; to me the choice was obvious.

Anyway, Nvidia is awesome for games as well, so it's a win-win! :P

Eventually, OpenCL will be implemented, open standards are great... :)

"I see 2 benchmarks for EVGA GeForce GTX 560ti, one with a render time of 5:30 the other 9:53, this is a huge difference in time for the same video card."

Actually, one of those is an EVGA Geforce *550* Ti.

No, there were TWO "EVGA GeForce GTX 560ti" benchmarks, one with a render time of 5:30, the other 9:53, but after I made the post here they removed the 9:53 benchmark.

Wow, what a crappy renderer, it took m8:s34.18 rendering with CUDA and h1:m4:s46.87 with my CPUs.

My system is a 16-core dual Opteron 2.3 GHz with 32 GB RAM and a Quadro 4000 video card. By what I've seen on the results sheet, my machine is slower than the slowest. When I am rendering with Softimage or Maya my CPUs are up at 100%, but with Cycles the average system load is less than 25% max.

Not very impressed!!!

By changing the number of threads from 16 to 64, the CPU render took m12:s11.44, seems a bit strange to me....

The stable release of Blender does not support multiple CPUs. You will need to download a custom build from http://www.graphicall.org/blender with OpenMP enabled, or compile your own with a compiler that supports OpenMP, getting the files from these SVN URLs:

https://svn.blender.org/svnroot/bf-blender/trunk/blender

https://svn.blender.org/svnroot/bf-blender/trunk/lib/win64

https://svn.blender.org/svnroot/bf-blender/trunk/lib/mingw64

Then compile them using CMake or SCons, remembering that if you use the C++ 2008 or C++ 2010 environment to compile, you will not have support for OpenMP.

So try getting or making a version of Blender with OpenMP, as that will give a better result: you have 2 CPUs, and Blender without OpenMP can only use 1 CPU, not both, even though it can use multiple cores.

Has anybody with two or more SLI cards tried Cycles render? The new GeForce GTX 590 has 1024 CUDA cores. So can we use 2048 or 3072 cores? And does anybody know why the best GTX card has 1024 cores and the best Quadro only 448?

I hate to break it to you, but right now Cycles doesn't support multi-GPU rendering...

Actually that is wrong. If you check out the source code there is a MultiDevice class that abstracts any number of devices. It doesn't work (quite) right yet but most of the multi-gpu code is there.

You guys freaking out about CUDA should relax a bit. Cycles works on OpenCL right now. Check out the source and build it! It's not finished, but it's 90% working.

I believe you should ask your users to also supply their CPU model. From the looks of the results, the CPU does have a non-negligible effect on the score.

For example, my GeForce 560 Ti runs on a Q6600. Scores for a 560 Ti in your database vary from 4 minutes to 6 minutes; that's a 50% range! A range of that size is improbable with the same graphics chipset and memory. Whether the graphics card is run on a Celeron or an i7 should and will also make a difference, as the GPU only does a part of the processing.
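The 50% figure can be checked with a couple of lines of Python. The helper name below is made up for this sketch, and it measures the spread relative to the fastest run, which is one reasonable reading of "range" here.

```python
def relative_spread(fastest_seconds, slowest_seconds):
    """How much longer the slowest run took than the fastest, as a fraction."""
    return (slowest_seconds - fastest_seconds) / fastest_seconds


# 4 minutes versus 6 minutes, both converted to seconds.
print(f"{relative_spread(4 * 60, 6 * 60):.0%}")  # prints 50%
```

The same helper can be applied to any pair of times reported for one card once they are converted to seconds.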

I agree, and that is why I am going with a GeForce 620 paired with an i5-3330, as the CPU will have to compile Blender before I can use it. Even though the 620 has fewer CUDA cores than the 560 Ti, they are supposed to be as good as double that amount, from what I have read on Nvidia sites. The better CPU will also enable better importing and exporting from the scripts in the 2.64.9 SVN build.